400 606 5609

Experience DEMO

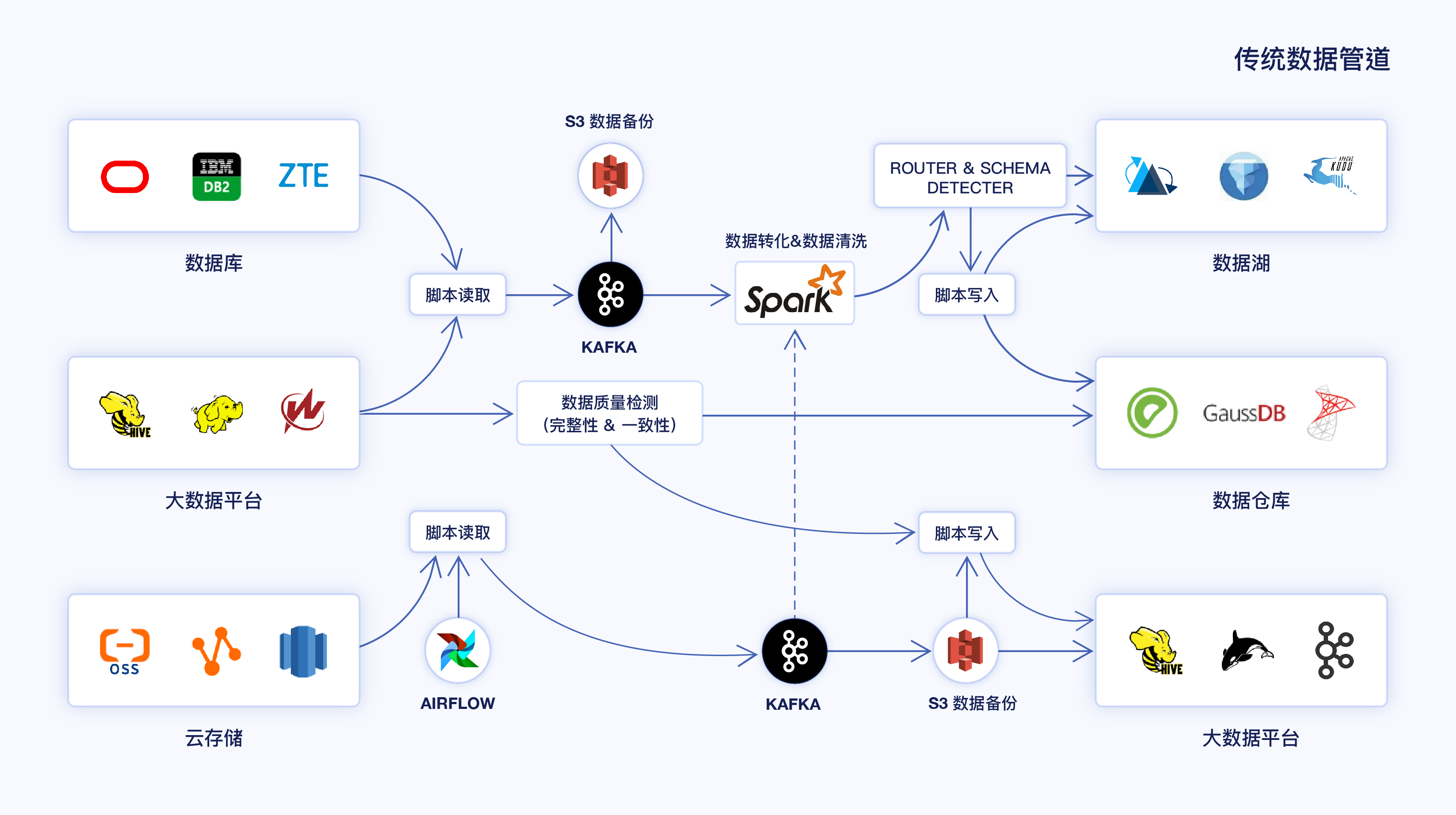

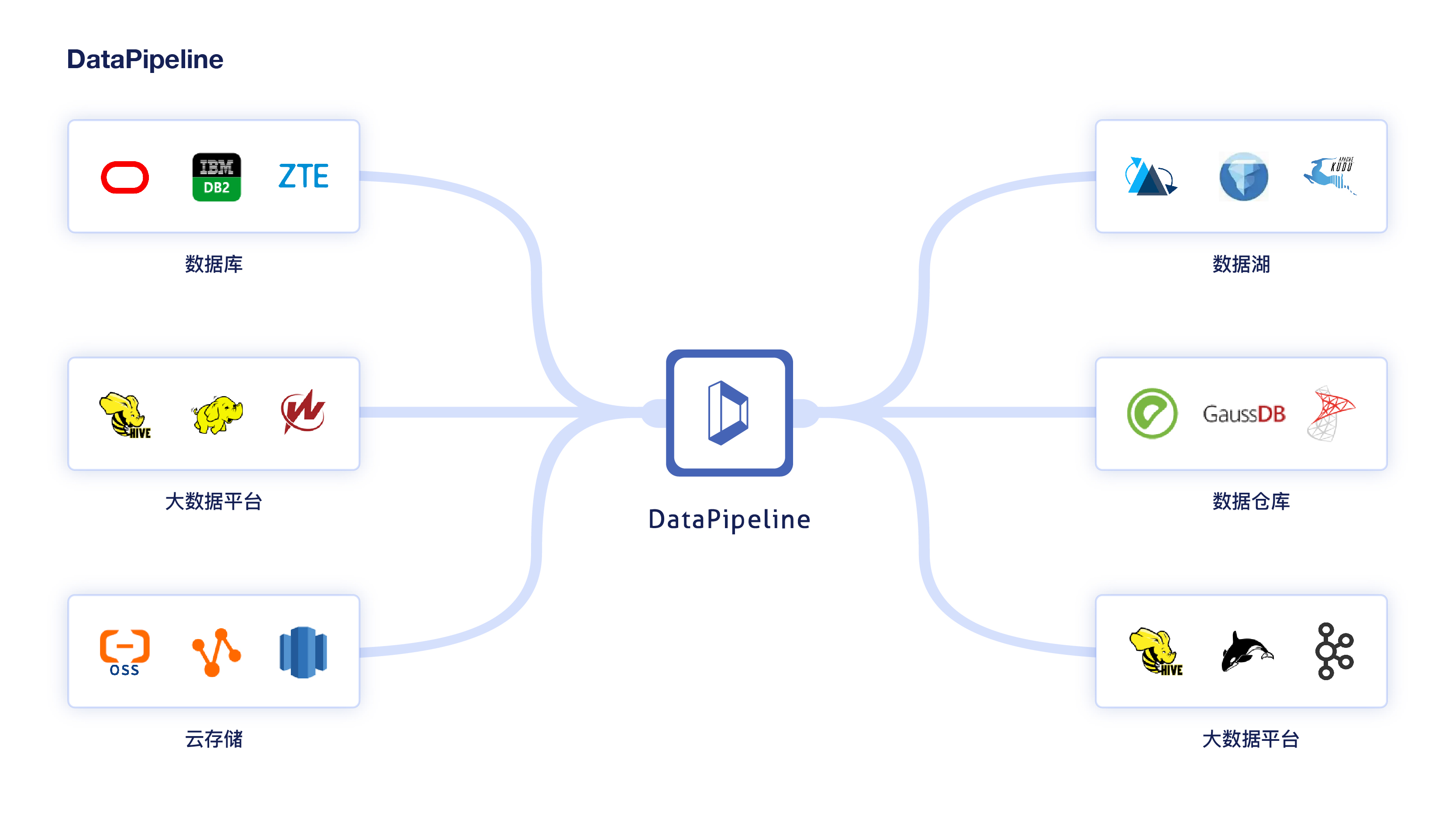

Unified batch and stream data fusion platform

Built on log-based Change Data Capture (CDC) technology, the platform supports rich, automated, and accurate semantic mapping between heterogeneous data sources and handles both real-time and batch data processing. It captures incremental data accurately from Oracle, IBM DB2, MySQL, MS SQL Server, PostgreSQL, GoldenDB, TDSQL, OceanBase, and other databases. The platform has six major strengths: comprehensive data coverage, fast transmission, strong collaboration, agility, high stability, and easy maintenance.
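As a rough illustration of what log-based incremental capture implies on the receiving side, the following minimal sketch replays ordered change events onto a target table held in memory. The `ChangeEvent` shape, the `apply_changes` function, and the integer log position are all assumptions made for this example; they are not DataPipeline's event format or API.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional


@dataclass
class ChangeEvent:
    """Hypothetical change event; real CDC tools define their own formats."""
    op: str                                # "insert", "update", or "delete"
    key: Any                               # primary key of the affected row
    row: Optional[Dict[str, Any]] = None   # new row image (None for deletes)
    position: int = 0                      # position of the change in the source log


def apply_changes(target: Dict[Any, Dict[str, Any]],
                  events: Iterable[ChangeEvent],
                  last_applied: int = -1) -> int:
    """Apply events to `target` in log order and return the last applied position.

    Events at or before `last_applied` are skipped, so replaying the same
    batch after a restart does not change the result.
    """
    for event in sorted(events, key=lambda e: e.position):
        if event.position <= last_applied:
            continue  # already applied in an earlier run
        if event.op in ("insert", "update"):
            target[event.key] = dict(event.row or {})
        elif event.op == "delete":
            target.pop(event.key, None)
        else:
            raise ValueError(f"unknown operation: {event.op}")
        last_applied = event.position
    return last_applied
```

Tracking the last applied log position is one common way to make replay safe after a failure; whether the platform uses this approach is not stated in the source.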

It supports many types of data nodes, including relational databases, NoSQL databases, domestic databases, data warehouses, big data platforms, cloud storage, and APIs. Custom data nodes can also be defined.

It provides TB-level throughput and sub-second latency for incremental data processing across different data node types, accelerating data flow in a range of enterprise scenarios.

Layered management to reduce costs and increase efficiency

With a layered management model of “data node registration, data link configuration, data task construction, and system resource allocation”, the construction cycle of an enterprise-level platform is reduced from three to six months to one week.
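The four layers named above can be pictured as steps that each depend on the previous one. The sketch below is only an illustration under assumed names (`Registry`, `register_node`, `configure_link`, `build_task`, and the `workers` setting are hypothetical); it is not the product's configuration interface.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class Registry:
    """Hypothetical in-memory model of the four management layers."""
    nodes: Dict[str, str] = field(default_factory=dict)               # node name -> node type
    links: Dict[str, Tuple[str, str]] = field(default_factory=dict)   # link name -> (source, destination)
    tasks: Dict[str, dict] = field(default_factory=dict)              # task name -> settings

    def register_node(self, name: str, node_type: str) -> None:
        # Layer 1: data node registration.
        self.nodes[name] = node_type

    def configure_link(self, name: str, source: str, destination: str) -> None:
        # Layer 2: a data link may only connect nodes that are already registered.
        for node in (source, destination):
            if node not in self.nodes:
                raise KeyError(f"unregistered node: {node}")
        self.links[name] = (source, destination)

    def build_task(self, name: str, link: str, workers: int = 1) -> None:
        # Layers 3 and 4: a task runs on a configured link with allocated resources.
        if link not in self.links:
            raise KeyError(f"unconfigured link: {link}")
        if workers < 1:
            raise ValueError("workers must be at least 1")
        self.tasks[name] = {"link": link, "workers": workers}
```

Because each layer validates against the one below it, a node registered once can be reused by many links and tasks, which is the kind of reuse a layered model is meant to allow.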

The platform provides more than ten types of advanced configuration in two categories, restriction configurations and policy configurations, including flexible data object mapping relationships. This reduces the development and delivery time of data fusion tasks from 2 weeks to 5 minutes.

Built on a distributed architecture, all components support high availability. Rich fault-tolerance strategies handle upstream and downstream structural changes, data errors, network failures, and other emergencies, helping meet the system's business continuity requirements.

A four-level monitoring system covering containers, applications, threads, and services, together with a panoramic dashboard, safeguards the stable operation of tasks. An automated operations and maintenance system provides flexible scaling up and down and sensible management and allocation of system resources.

Break down the integration and collaboration barriers of siloed and project-based systems. The platform helps customers build diverse, end-to-end data pipelines across heterogeneous systems, delivering real-time and accurate data changes.

DataPipeline has taken root in the financial industry, which has extremely high standards for security, stability, and performance, and continues to blossom in various industries.

DataPipeline has successfully served numerous Global and China Fortune 500 companies across industries such as finance, telecommunications, energy, manufacturing, retail, and real estate.

Following the DataOps philosophy, our products are designed to enable enterprises to continuously build data pipelines and deliver data as quickly and flexibly as delivering applications.

With a deeply self-developed infrastructure, we define the foundational systems for a new paradigm of data management, positioning ourselves as a core provider of world-class data architecture components.

The best practices in the field of new data infrastructure have been recognized by hundreds of clients, including many Fortune 500 companies.

A comprehensive and trustworthy full-stack product and technology offering; certified by authoritative compliance standards; equipped with a robust security and operations system; and highly compatible with domestic software and hardware.

A complete service system with a 24/7 service guarantee; a professional technical support network covering the whole country enables rapid response from a nearby location.